Vertex Animation Texture (VAT) is a technique that has recently been used in the production of various video games. Instead of updating each vertex's local position from morph data stored in the mesh, the vertex shader reads the position from a texture in which the mesh's local XYZ positions have been baked into the RGB channels of each pixel, so the animation runs almost entirely on the GPU.

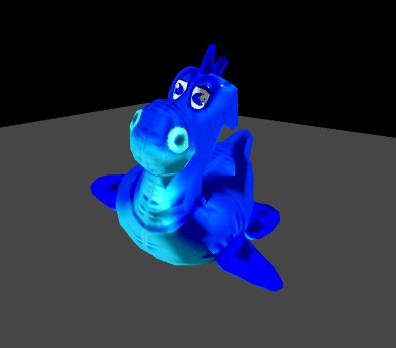

In this article I will show how to run VAT on WebGL with Three.js, the major JavaScript 3D library, using Houdini, a procedural 3D application, for modeling and export.

Target

WebGL 1 (OpenGL ES 2.0)

Requirements

TypeScript 3.8.3

Three.js r117

Houdini Indie 18.0.532

Browsers

Chrome 84

Firefox 79

Safari (iOS) 13.4.1

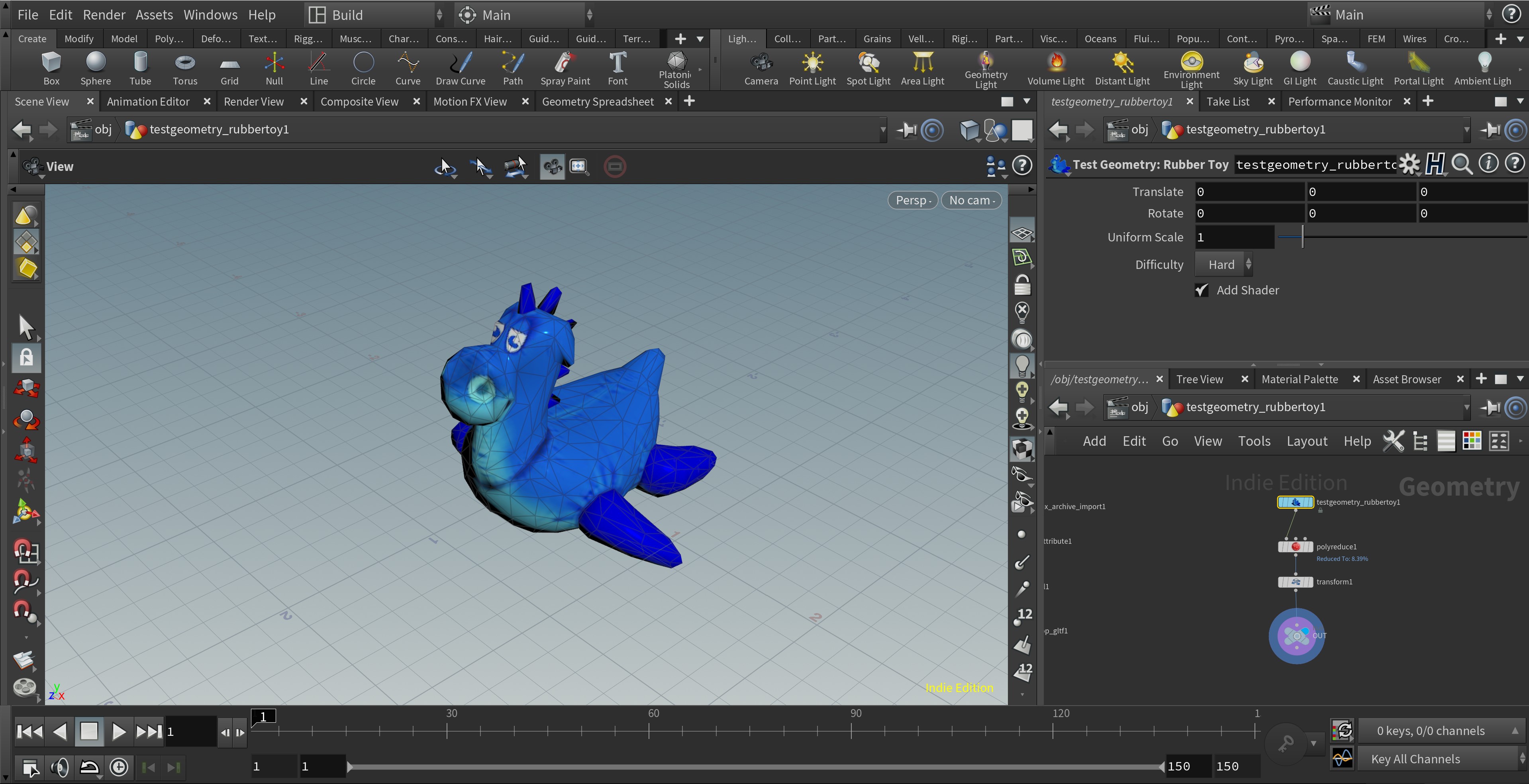

First, let's record the animation in Houdini. I will not cover modeling and rigging in detail, since they are outside the scope of this article.

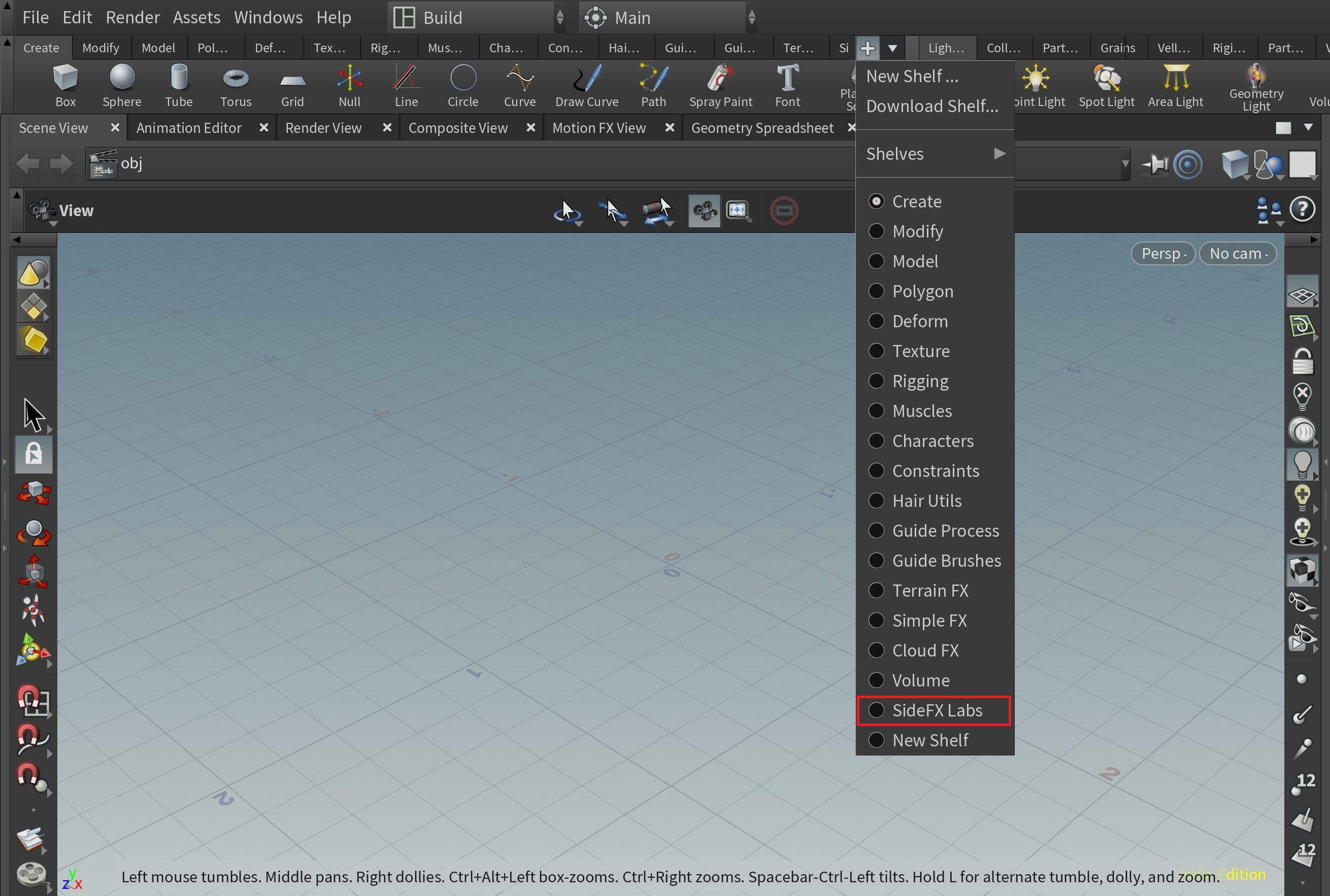

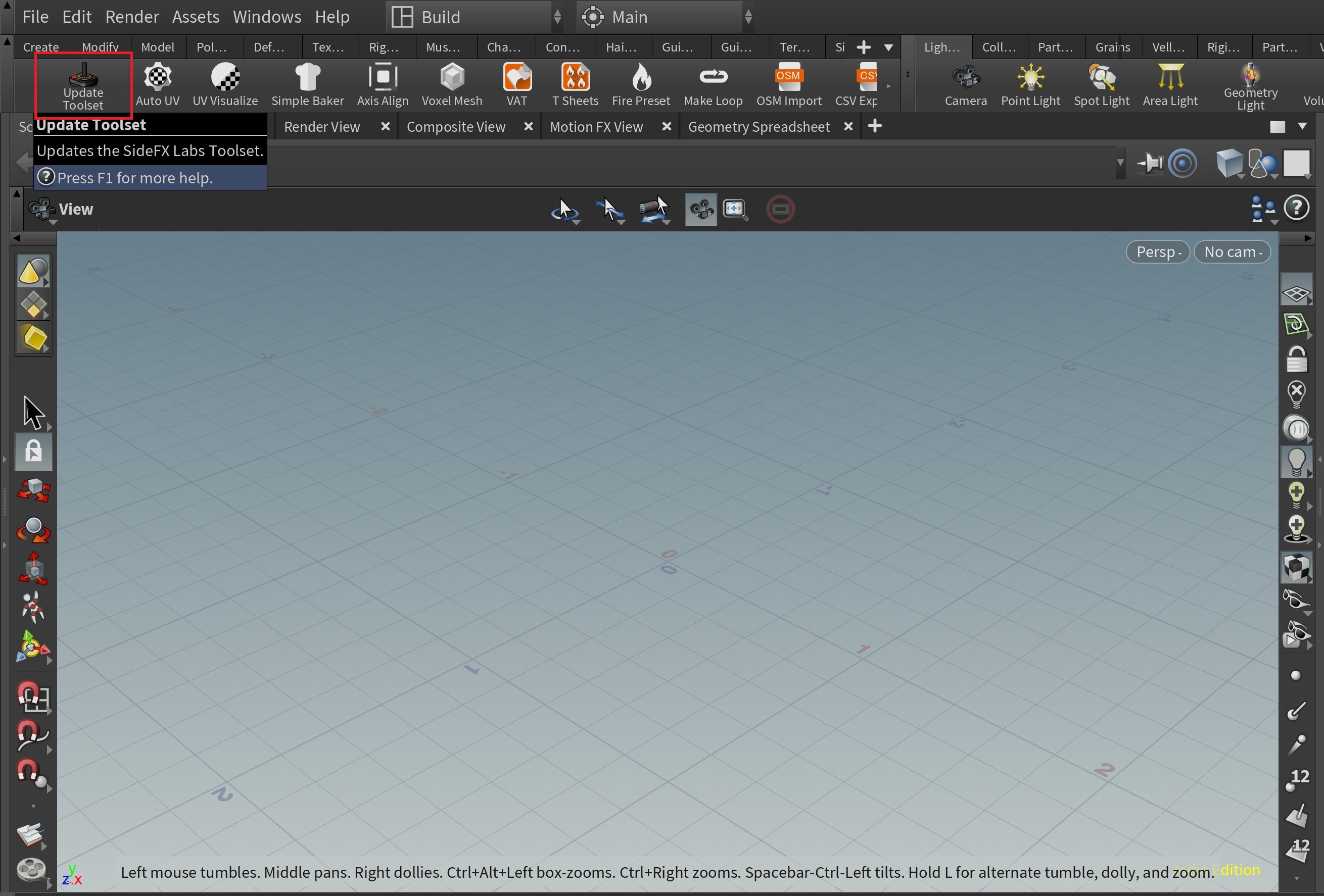

Enable the SideFX Labs shelf

Modeling…

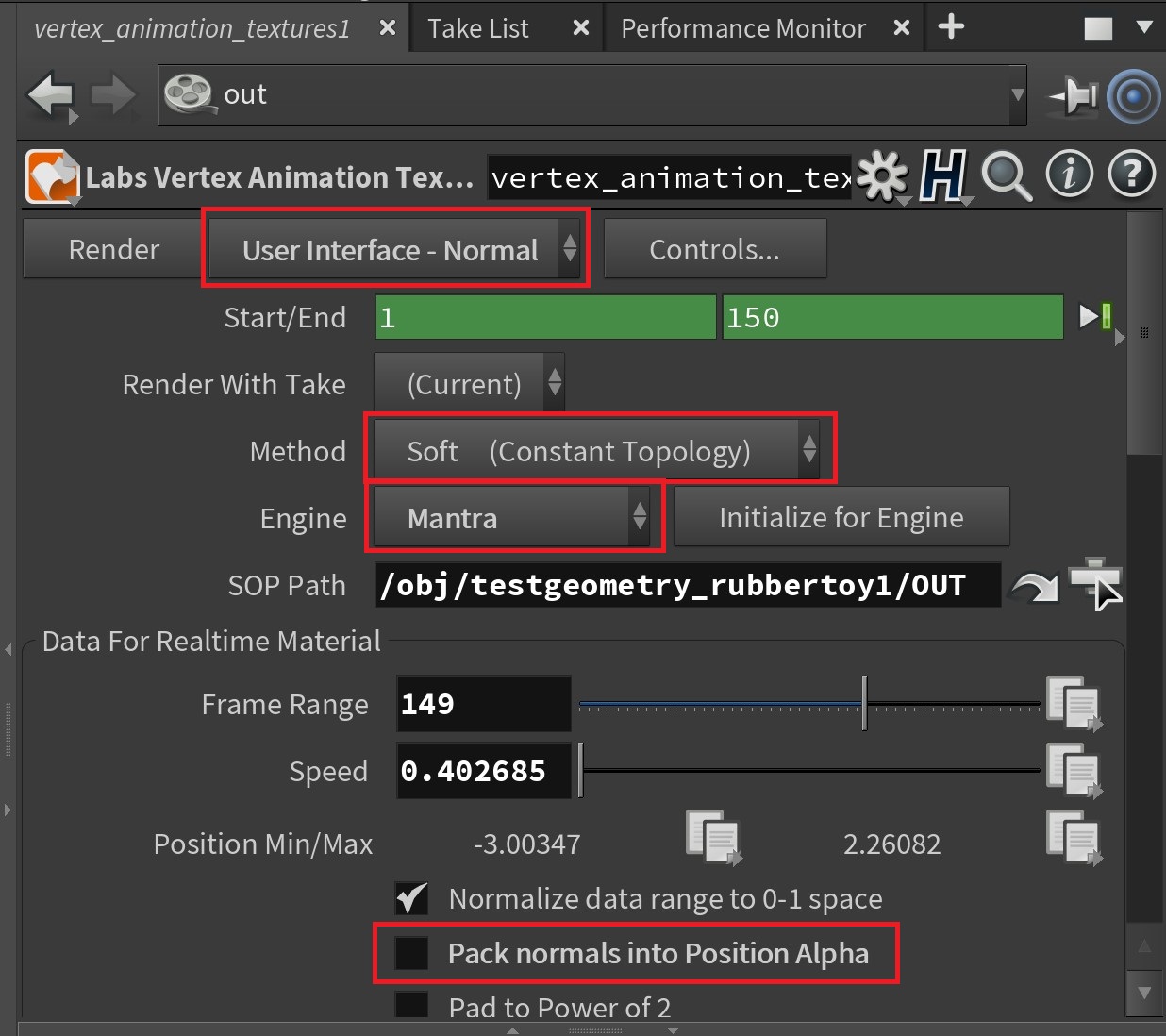

Export VAT

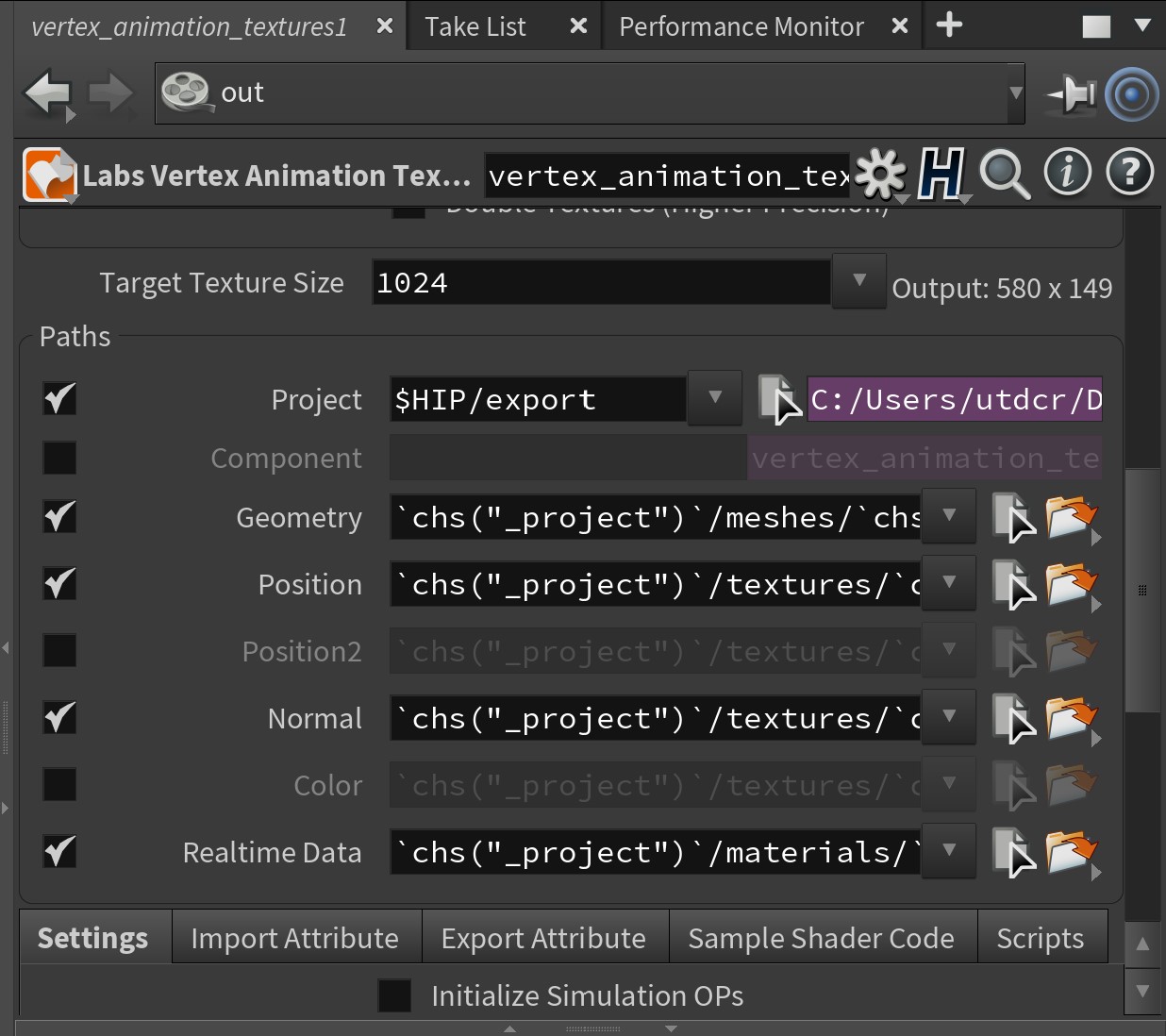

Texture size

Keep the number of Points (point indices) in the mesh below the maximum texture size of the devices you are targeting. The position of each Point is written to one pixel along the horizontal axis of the texture, and each animation frame is written as one row along the vertical axis. A maximum texture size of 4096 × 4096 pixels is supported by almost all current devices, both mobile and PC, so it is a reasonable limit for an application that uses VAT.
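The layout described above can be checked with a little arithmetic before exporting. The following is a minimal sketch and not part of the original pipeline: `fitsInVatTexture` and its parameter names are hypothetical, and the default limit of 4096 is simply the value quoted above. At runtime, Three.js reports the actual device limit as `renderer.capabilities.maxTextureSize`.

```typescript
// Hypothetical helper: checks whether a VAT position texture fits on the target device.
// Assumes one point per pixel horizontally and one animation frame per row vertically.
function fitsInVatTexture(
  pointCount: number,
  frameCount: number,
  maxTextureSize: number = 4096
): boolean {
  const width = pointCount; // one column per point
  const height = frameCount; // one row per frame
  return width <= maxTextureSize && height <= maxTextureSize;
}

console.log(fitsInVatTexture(3000, 120)); // true
console.log(fitsInVatTexture(5000, 120)); // false: too many points for one row
```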

Supporting 4096×4096 textures on different devices

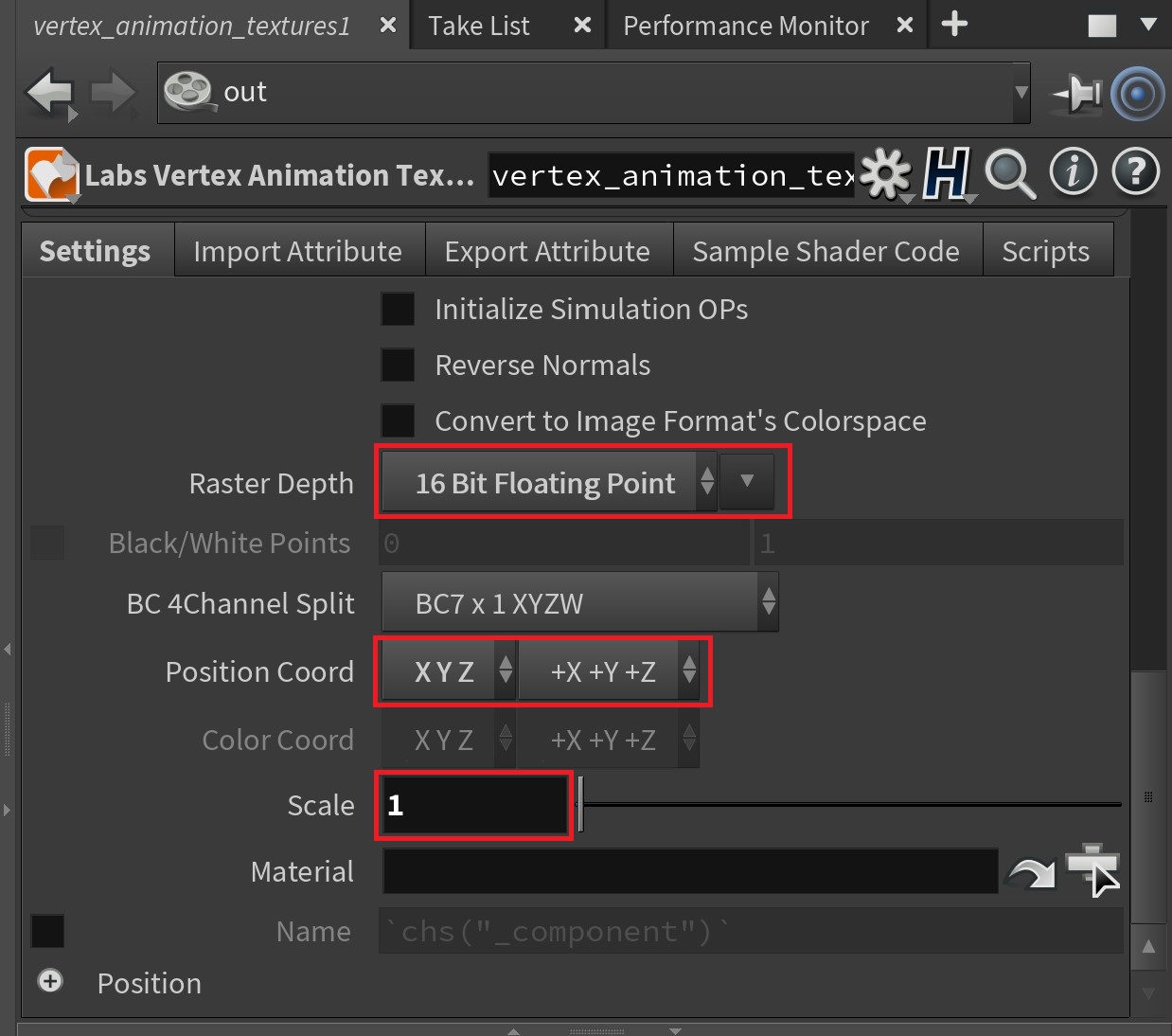

Texture precision

We export the textures in EXR format and load them with EXRLoader, a module shipped with Three.js.

In my tests, the 32-bit float EXR texture exported by VAT failed to load, while the half-float version loaded correctly (an issue exists…). I think half float is precise enough for almost all cases.
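To get a feel for what half-float precision means for positions, the rounding error can be estimated by hand. This is a rough sketch under stated assumptions: the texture stores positions normalized to the 0 to 1 range (as the shader later in this article implies by rescaling with the bounding box), and the helper names `halfFloatStep` and `worstCasePositionError` are hypothetical.

```typescript
// Spacing between adjacent half-float values around `value`.
// Half floats have a 10-bit mantissa; normal numbers start at 2^-14.
function halfFloatStep(value: number): number {
  let m = Math.abs(value);
  if (m < Math.pow(2, -14)) {
    return Math.pow(2, -24); // subnormal range: fixed spacing
  }
  // Find the binary exponent by repeated halving/doubling (exact for doubles).
  let exponent = 0;
  while (m >= 2) { m /= 2; exponent++; }
  while (m < 1) { m *= 2; exponent--; }
  return Math.pow(2, exponent - 10);
}

// Worst-case rounding error, in scene units, for a normalized sample in [0, 1)
// that is rescaled by the bounding-box range. The coarsest spacing in that
// interval is the one used for values in [0.5, 1).
function worstCasePositionError(boundingBoxMin: number, boundingBoxMax: number): number {
  const range = boundingBoxMax - boundingBoxMin;
  return (halfFloatStep(0.5) / 2) * range;
}

// For a model that spans 2 units, the error stays below 0.0005 units.
console.log(worstCasePositionError(-1, 1));
```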

Normal

The VAT menu has an option called “Pack normals into Position Alpha”. With it enabled, you must decode a single float into a three-component vector in the vertex shader. I tried the code below, which is shown on the “Sample Shader Code” tab when you set the “Engine” option to Unity (I rewrote it from HLSL to GLSL), but the quality was far from good. For now, you should export a separate normal texture and compress it to an 8-bit PNG, which gives much better quality in almost all cases.

vec3 decodeNormal(float a) {

//decode float to vec2

float alpha = a * 1024.0;

vec2 f2;

f2.x = floor(alpha / 32.0) / 31.5;

f2.y = (alpha - (floor(alpha / 32.0)*32.0)) / 31.5;

//decode vec2 to vec3

vec3 f3;

f2 *= 4.0;

f2 -= 2.0;

float f2dot = dot(f2,f2);

f3.xy = sqrt(1.0 - (f2dot/4.0)) * f2;

f3.z = 1.0 - (f2dot/2.0);

f3 = clamp(f3, -1.0, 1.0);

return f3;

}
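For inspecting the packed format outside the shader, the same arithmetic can be run on the CPU. The following is a sketch of a TypeScript port of the GLSL above, not code from the exporter. It makes visible that the float is split into two values with only 32 levels each (1024 = 32 × 32), which may be one reason for the limited quality. The `Math.max` guard is an addition of this sketch and is not present in the GLSL.

```typescript
// CPU-side port of the GLSL decodeNormal, for inspecting the packed format.
function decodeNormal(a: number): [number, number, number] {
  // Unpack two values in the range 0..31 from one float.
  const alpha = a * 1024.0;
  const high = Math.floor(alpha / 32.0);
  let x = high / 31.5;
  let y = (alpha - high * 32.0) / 31.5;

  // Map both values to [-2, 2] and invert the sphere mapping.
  x = x * 4.0 - 2.0;
  y = y * 4.0 - 2.0;
  const d = x * x + y * y;

  // Guard against a negative square root when d exceeds 4
  // (an addition of this sketch; the GLSL has no such guard).
  const s = Math.sqrt(Math.max(0.0, 1.0 - d / 4.0));

  const clamp = (v: number): number => Math.min(1.0, Math.max(-1.0, v));
  return [clamp(s * x), clamp(s * y), clamp(1.0 - d / 2.0)];
}

// Packed value with both components at level 16, close to the +Z direction.
const decoded = decodeNormal(528 / 1024);
const decodedLength = Math.sqrt(
  decoded[0] * decoded[0] + decoded[1] * decoded[1] + decoded[2] * decoded[2]
);
console.log(decoded, decodedLength); // the length is close to 1
```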

Decode from position texture’s alpha

Decode from normal texture

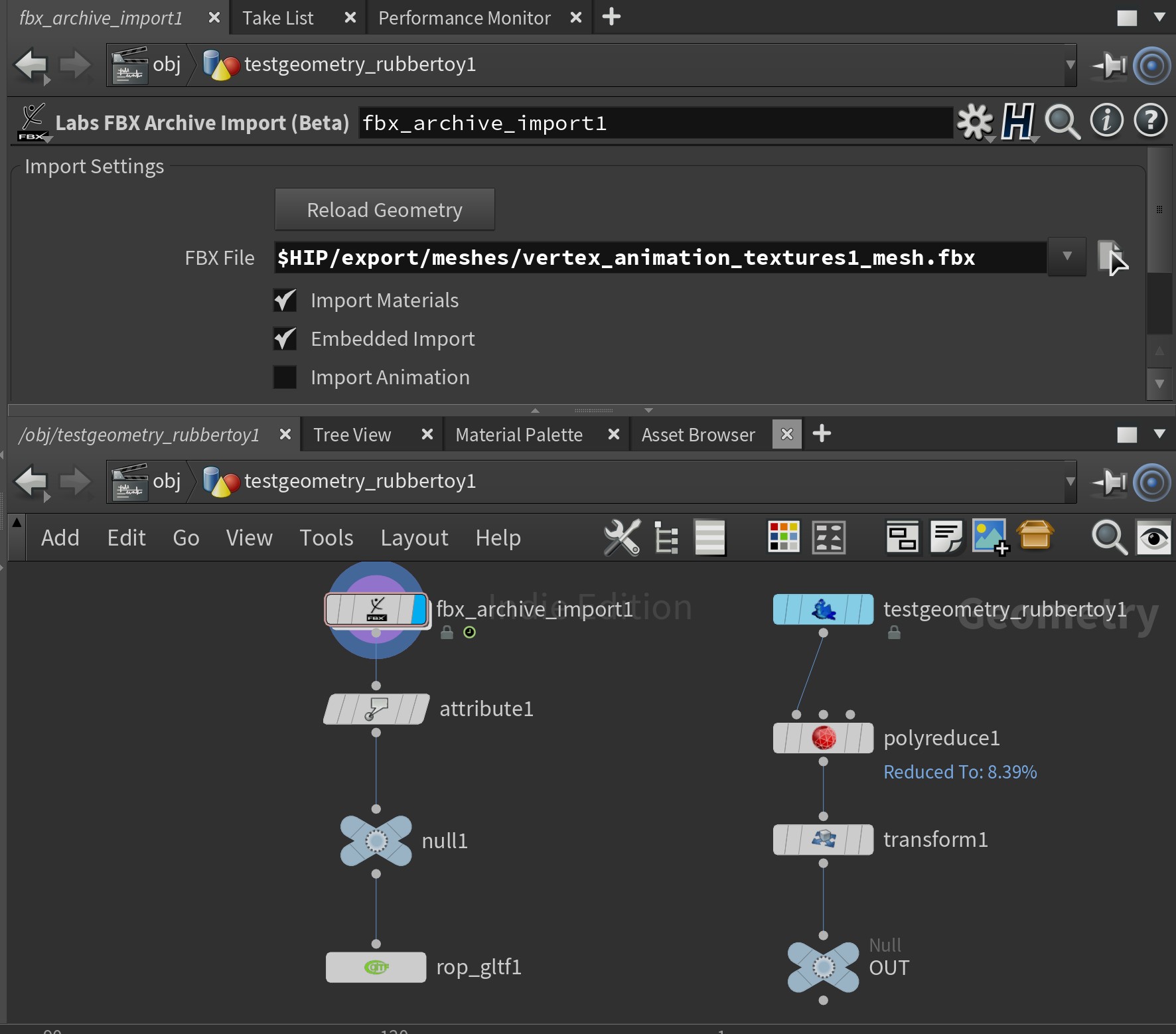

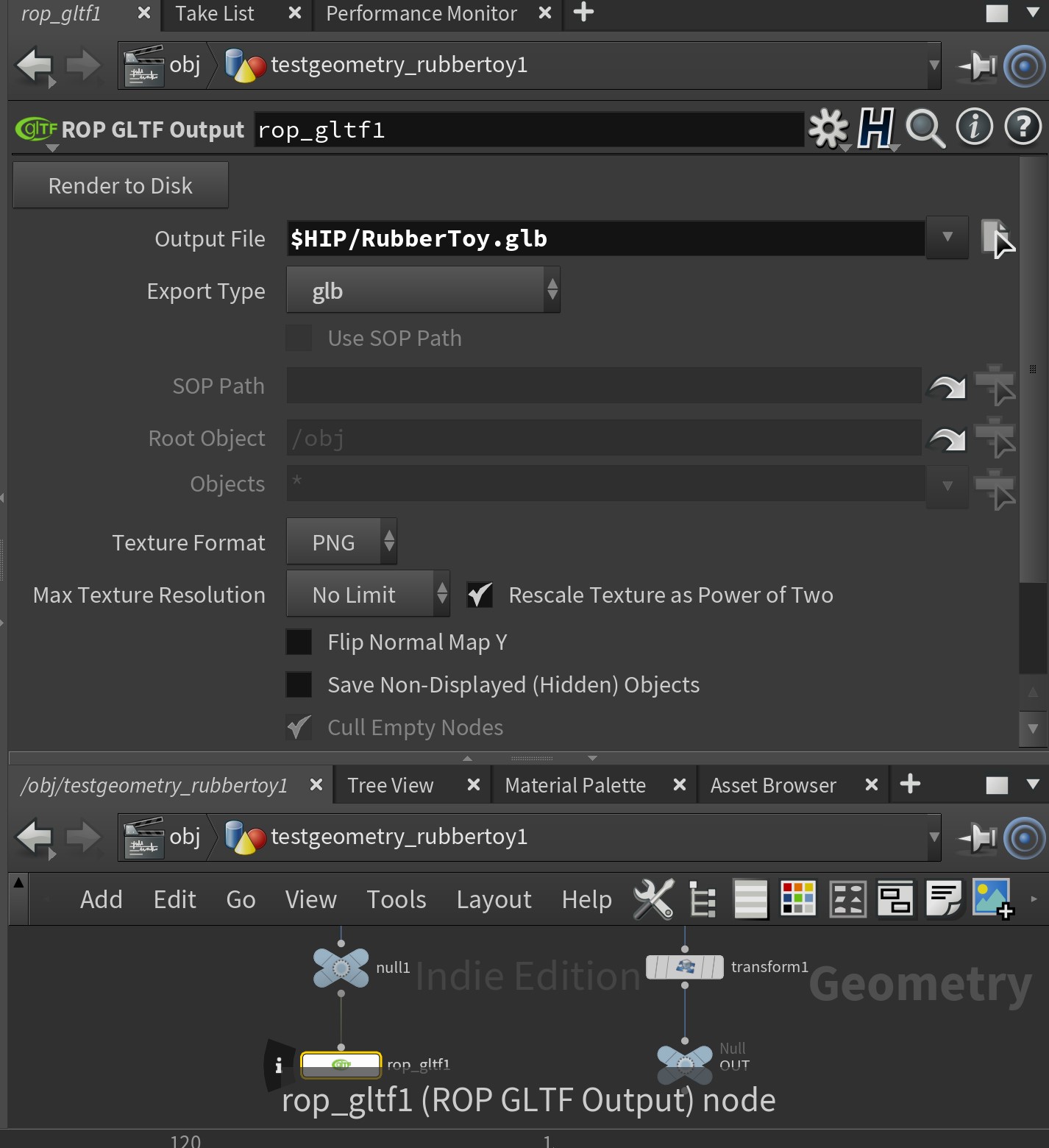

FBX to glTF

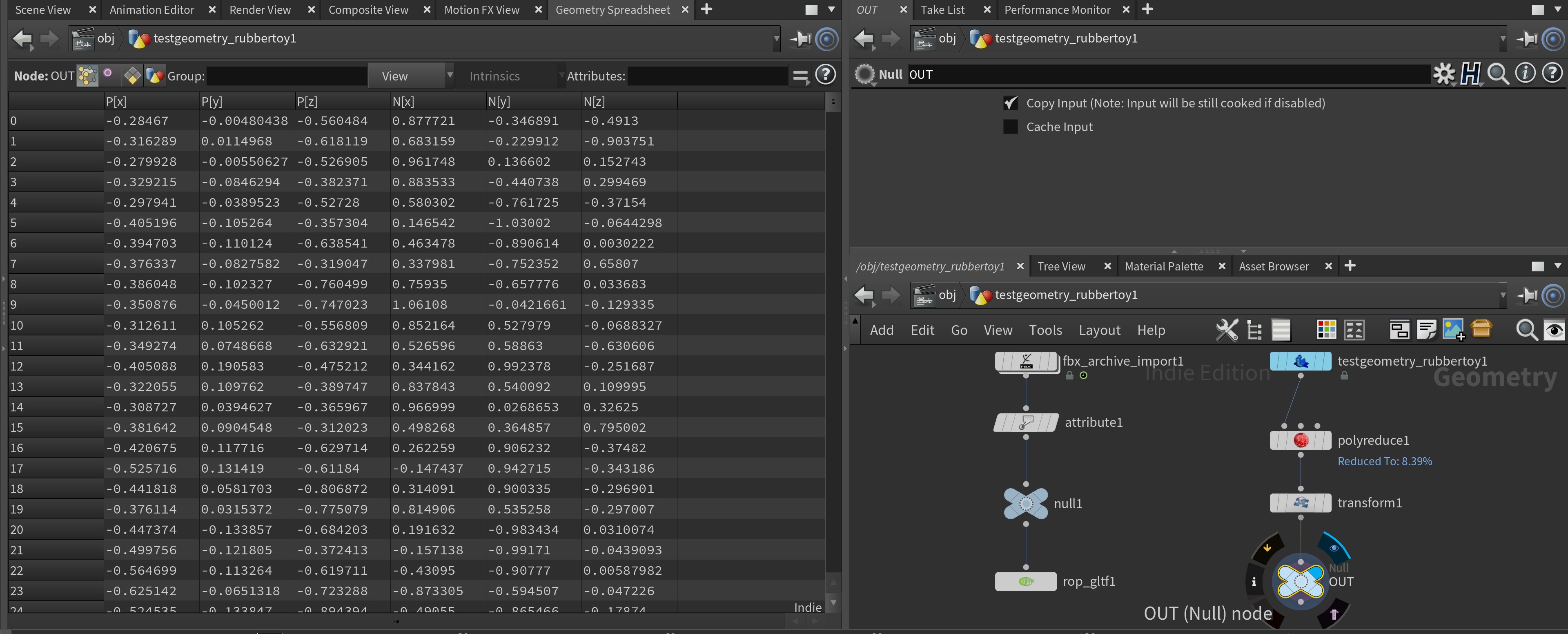

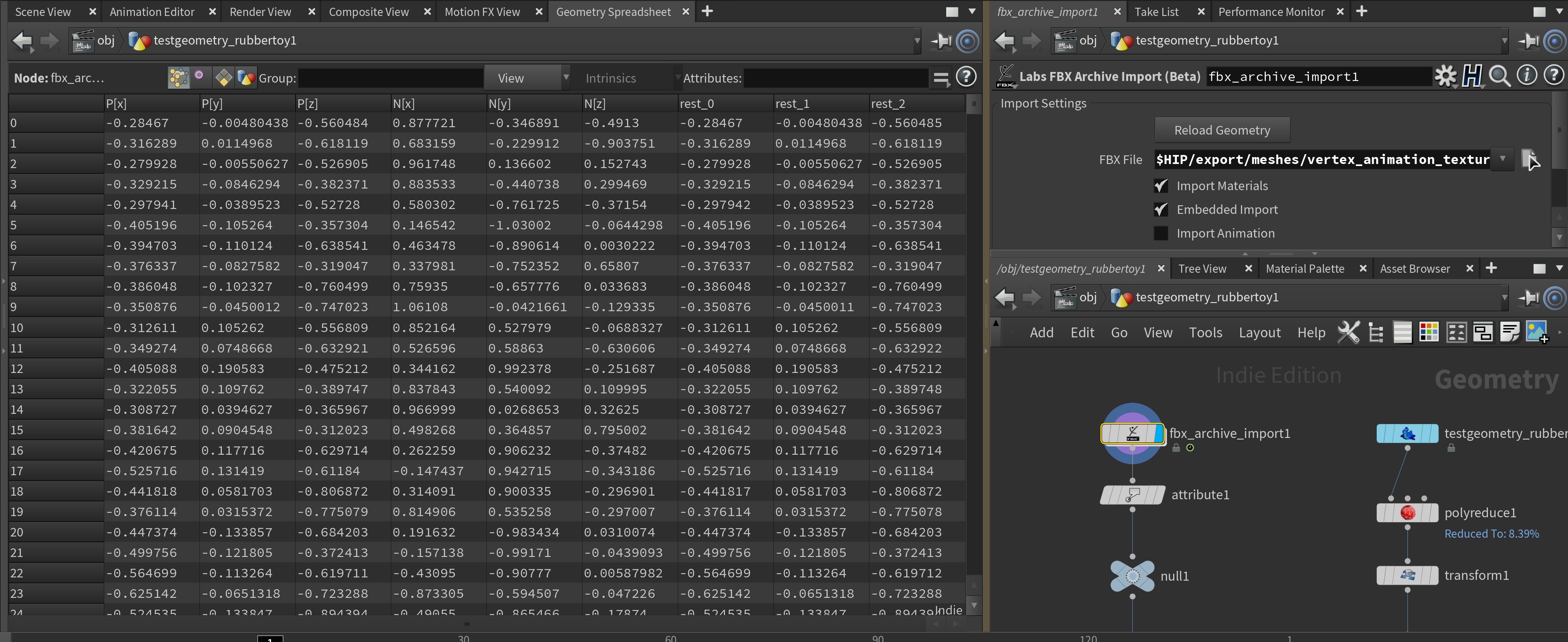

You have now exported the mesh (FBX), the JSON and the texture (EXR). One last task remains in Houdini: converting the FBX mesh to glTF format for WebGL.

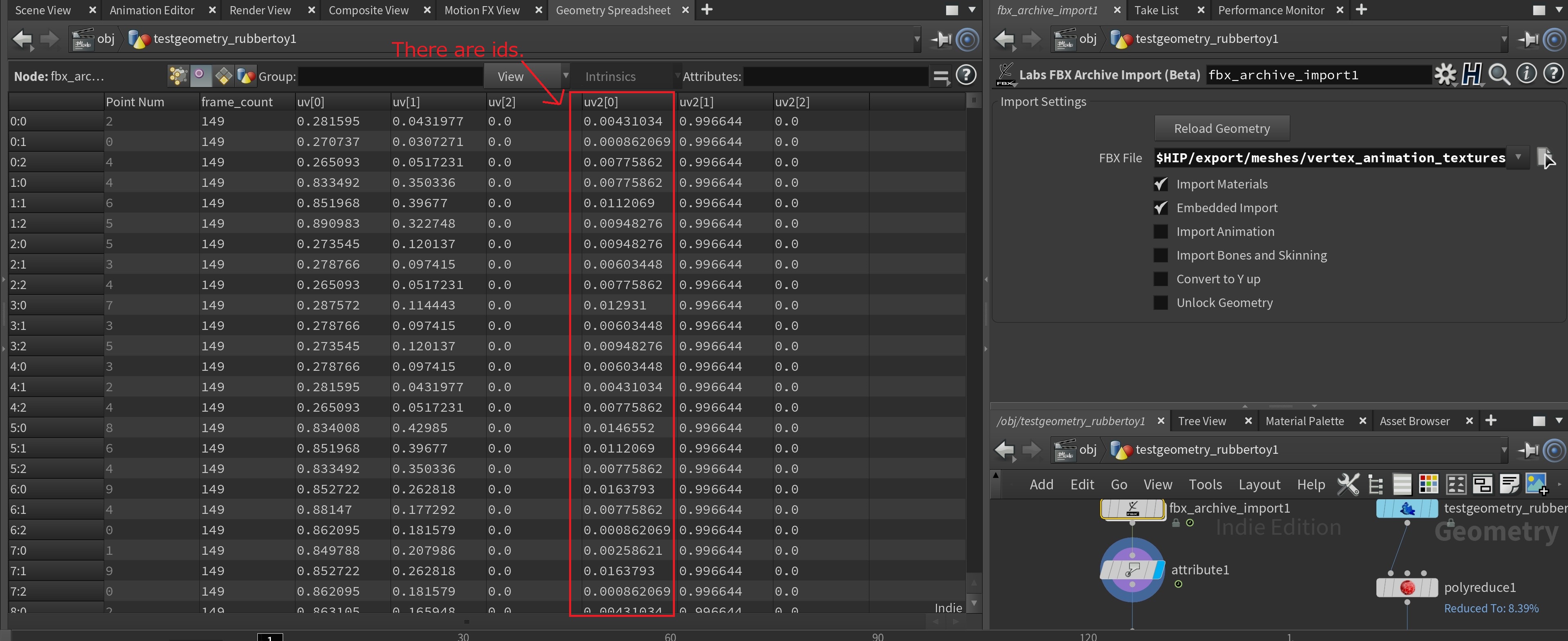

The exported mesh contains the data needed to retrieve the animation in the vertex shader. For instance, the x component of the two-component vector “uv2” (uv2.x) holds the “id” used to sample the correct pixel for each Point.
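One way to picture this “id” is as a horizontal texture coordinate that lands on the pixel column of a Point. The sketch below assumes that the coordinate targets the center of the column; whether the exporter writes exactly these values into uv2.x is an assumption here, and both function names are hypothetical.

```typescript
// Hypothetical: horizontal texture coordinate at the center of a point's pixel column.
function pointIndexToU(pointIndex: number, textureWidth: number): number {
  return (pointIndex + 0.5) / textureWidth;
}

// Inverse mapping: the pixel column that a given u coordinate falls into.
function uToPixelColumn(u: number, textureWidth: number): number {
  return Math.min(textureWidth - 1, Math.floor(u * textureWidth));
}

const u = pointIndexToU(7, 1024);
console.log(u, uToPixelColumn(u, 1024)); // the column is 7 again
```

Sampling at the column center keeps the lookup away from the border between two columns, which matters when the texture uses nearest-neighbor filtering.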

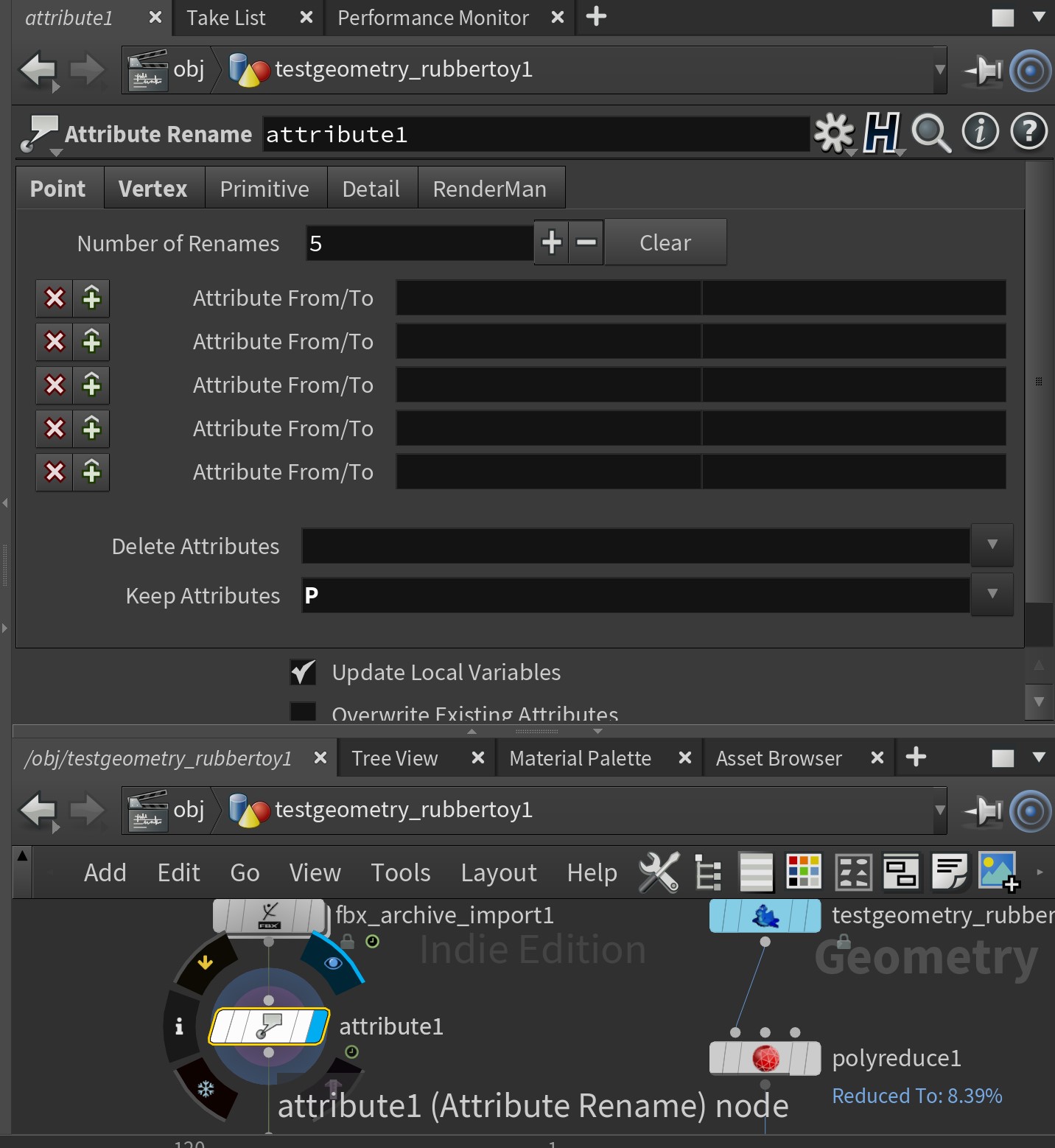

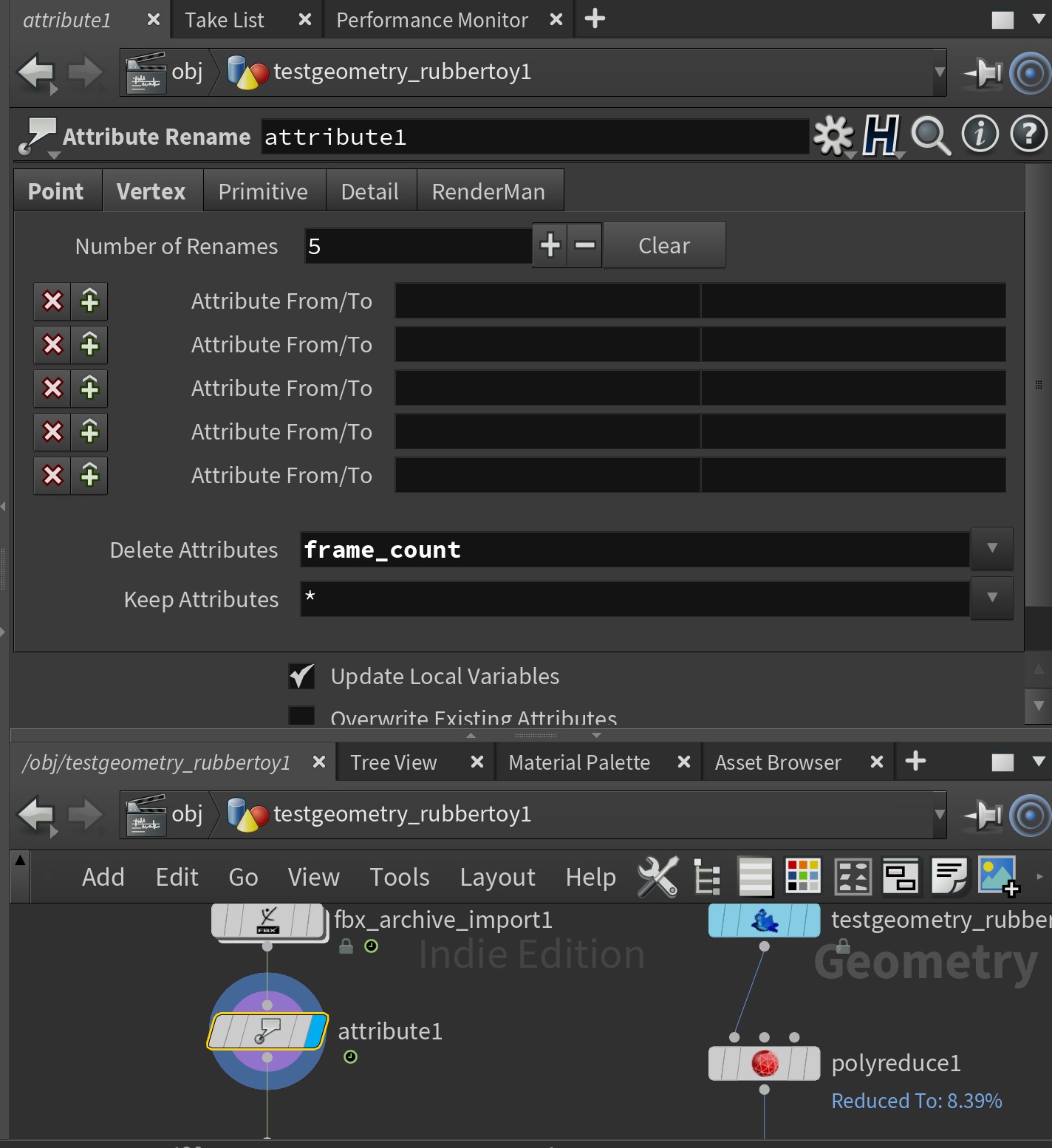

The FBX also contains some unnecessary data, so we remove it with an Attribute Rename node before exporting the mesh as glTF.

CPU Code

Load the EXR texture with the correct settings.

import * as THREE from 'three';

import { EXRLoader } from 'three/examples/jsm/loaders/EXRLoader';

const loadVATexrTexture = async (url: string) => {

const loader = new EXRLoader();

return new Promise<THREE.Texture>((resolve, reject) => {

loader.setDataType(THREE.HalfFloatType).load(url, texture => {

// keep the raw float values (no color space conversion)

texture.encoding = THREE.LinearEncoding;

// read exact texel values without interpolation

texture.magFilter = THREE.NearestFilter;

// disable mipmaps

texture.generateMipmaps = false;

resolve(texture);

}, undefined, reject);

});

};

References

Three.js Textures

Texture parameters (in Japanese)

Set the uniform variables for the shader.

import data from '../../dist/assets/model/VAT/data.json';

const VATdata = data[0];

// loadShaders is a custom helper function (not part of Three.js)

const shaderData = await loadShaders([

{ key: 'vertex', path: './assets/shaders/shader.vert' },

{ key: 'fragment', path: './assets/shaders/shader.frag' },

]);

const positionMap = await loadVATexrTexture('./assets/model/VAT/position.exr');

// 8-bit PNG converted from EXR

// loadTexture is a custom helper function (not part of Three.js)

const normalMap = await loadTexture('./assets/model/VAT/normal.png');

normalMap.encoding = THREE.LinearEncoding;

normalMap.minFilter = THREE.LinearFilter;

normalMap.magFilter = THREE.NearestFilter;

const uniforms = {

positionMap: {

value: positionMap,

},

normalMap: {

value: normalMap,

},

// set bounding box for correct scale

boudingBoxMax: {

value: VATdata.posMax,

},

// set bounding box for correct scale

boundingBoxMin: {

value: VATdata.posMin,

},

// total number of animation frames

totalFrame: {

value: VATdata.numOfFrames,

},

currentFrame: {

value: 0,

},

};

const material = new THREE.ShaderMaterial({

uniforms: uniforms,

vertexShader: shaderData.vertex,

fragmentShader: shaderData.fragment,

});

Send the currentFrame uniform to the shader on every rendered frame.

let camera: THREE.PerspectiveCamera;

let scene: THREE.Scene;

let renderer: THREE.WebGLRenderer;

let currentFrame = 0;

const animate = () => {

requestAnimationFrame(animate);

currentFrame += 1;

material.uniforms.currentFrame.value = currentFrame;

if (currentFrame === Math.floor(material.uniforms.totalFrame.value)) {

currentFrame = 0;

}

renderer.render(scene, camera);

};
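The loop above advances one VAT frame per rendered frame, so playback speed follows the display refresh rate. A time-based variant is sketched below. It is not part of the original code: `frameAtTime` is a hypothetical helper, and the frames-per-second value is assumed to match the rate used when baking in Houdini.

```typescript
// Hypothetical helper: VAT frame index for a given elapsed time in seconds.
function frameAtTime(
  elapsedSeconds: number,
  framesPerSecond: number,
  totalFrames: number
): number {
  const frame = Math.floor(elapsedSeconds * framesPerSecond);
  // The double modulo keeps the result in [0, totalFrames) for negative times too.
  return ((frame % totalFrames) + totalFrames) % totalFrames;
}

console.log(frameAtTime(1, 30, 60)); // 30
console.log(frameAtTime(2.5, 30, 60)); // 15 (frame 75 wrapped around 60)
```

Inside `animate`, the result could be assigned to `material.uniforms.currentFrame.value`, with the elapsed time taken from a `THREE.Clock` via `getElapsedTime()`.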

Vertex shader

Three.js automatically inserts some built-in uniforms and attributes when the shader is compiled.

varying vec2 vUv;

varying vec3 vViewPosition;

varying vec3 vNormal;

attribute vec2 uv2;

uniform float boudingBoxMax;

uniform float boundingBoxMin;

uniform float currentFrame;

uniform float totalFrame;

uniform sampler2D positionMap;

uniform sampler2D normalMap;

void main() {

// computed inside main(): GLSL ES 1.00 requires global initializers to be constant expressions

float boundingBoxRange = boudingBoxMax - boundingBoxMin;

float pu = uv2.x;

float pv = 1.0 - fract( currentFrame / totalFrame );

vec2 shiftUv = vec2( pu, pv );

vec4 samplePosition = texture2D( positionMap, shiftUv );

samplePosition *= boundingBoxRange;

samplePosition += boundingBoxMin;

vec4 outPosition = vec4( samplePosition.xyz , 1.0 );

gl_Position = projectionMatrix * modelViewMatrix * outPosition;

vUv = uv;

vec4 mvPosition = modelViewMatrix * vec4( outPosition.xyz, 1.0 );

vViewPosition = - mvPosition.xyz;

vec4 texelNormalPosition = texture2D( normalMap, shiftUv );

texelNormalPosition *= boundingBoxRange;

texelNormalPosition += boundingBoxMin;

vNormal = normalMatrix * texelNormalPosition.xyz;

}
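The two pieces of arithmetic in this shader, choosing the texture row for the current frame and rescaling the sampled value with the bounding box, can be mirrored on the CPU to check values from data.json by hand. This is only a sketch of the same formulas; the function names `vatRowV` and `decodePosition` are hypothetical.

```typescript
// Mirrors `1.0 - fract(currentFrame / totalFrame)` from the vertex shader.
function vatRowV(currentFrame: number, totalFrame: number): number {
  const ratio = currentFrame / totalFrame;
  return 1.0 - (ratio - Math.floor(ratio));
}

// Mirrors `sample * boundingBoxRange + boundingBoxMin` for one channel.
function decodePosition(
  sample: number,
  boundingBoxMin: number,
  boundingBoxMax: number
): number {
  const boundingBoxRange = boundingBoxMax - boundingBoxMin;
  return sample * boundingBoxRange + boundingBoxMin;
}

console.log(vatRowV(15, 60)); // 0.75
console.log(decodePosition(0.5, -1, 3)); // 1
```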

That’s all.

Because the shader has the currentFrame variable, you can control anything per frame: for instance opacity or color, slow motion, smoother animation by blending adjacent frames, reverse playback, and instancing…
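As an illustration of blending frames, a fractional playhead can be split into two neighboring frames and a blend weight. The sketch below is one possible approach, not the author's implementation; `blendFrames` and the `FrameBlend` type are hypothetical. In the shader, the position texture would then be sampled at both rows and combined with GLSL's built-in `mix()`.

```typescript
interface FrameBlend {
  frameA: number; // frame at or before the playhead
  frameB: number; // next frame, wrapping to 0 at the end
  weight: number; // 0 = only frameA, approaching 1 = only frameB
}

// Hypothetical helper: splits a fractional playhead into two frames and a weight.
function blendFrames(playhead: number, totalFrames: number): FrameBlend {
  // Wrap into [0, totalFrames), also for negative playheads (reverse playback).
  const wrapped = ((playhead % totalFrames) + totalFrames) % totalFrames;
  const frameA = Math.floor(wrapped);
  const frameB = (frameA + 1) % totalFrames;
  return { frameA: frameA, frameB: frameB, weight: wrapped - frameA };
}

console.log(blendFrames(10.25, 60)); // { frameA: 10, frameB: 11, weight: 0.25 }
console.log(blendFrames(59.5, 60)); // { frameA: 59, frameB: 0, weight: 0.5 }
```

Advancing the playhead by less than one per rendered frame gives slow motion, and decreasing it plays the animation in reverse.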

References

Shader graph: Rigid body animation using vertex animation textures

Texture Animation: Applying Morphing and Vertex Animation Techniques

VAT (Vertex Animation Texture) on Unity HDRP examples

Case Study of Lusion by Lusion: Winner of Site of the Month May