This article introduces the paper “Phase Vocoder Done Right”, which shows how the quality of audio signal time stretching can be improved.

How did I end up reading this paper?

I’m a programmer working mainly on the client side of the web, and I enjoy the various APIs that web browsers implement. At some point I started to wonder why the Web Audio API does not implement audio time stretching (apart from `HTMLMediaElement.playbackRate`), even though it provides so many highly abstracted audio processing filters and more. So I set out on a journey into audio signal processing.

I first implemented an algorithm called “time-domain pitch synchronous overlap and add” (TD-PSOLA). Its quality was not bad, although it needs a lot of computation, which is acceptable given the machine resources we have these days. I also found the “phase vocoder” algorithm, which is known for doing its calculation in the frequency domain; however, when I implemented it, the quality did not feel good enough. So I started looking for a better algorithm again, and finally arrived at this paper.

In short, the paper argues that the classical phase vocoder (PV) algorithms lose quality compared with the original signal before time stretching, and that they also cause an echoing side effect. The paper therefore focuses on phase reconstruction that takes phase integration between frames into account. While learning the basics of audio signal processing, I found that historically the human sense of hearing was considered not very sensitive to phase (as in Helmholtz’s *On the Sensations of Tone*), whereas recent research on audio synthesis puts considerable emphasis on phase.
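To make “phase integration between frames” concrete, here is a minimal sketch of how a classical PV sets the phase of each bin: it estimates the bin’s instantaneous frequency from the phase difference between two analysis frames and integrates it over the synthesis hop. It is stdlib-only Python, and the names (`princarg`, `classic_pv_phase`, `hop_a`, `hop_s`) are illustrative, not taken from the paper. Every bin is handled independently and only along time, so the phase relationship between neighbouring bins is not preserved; this loss of “vertical” phase coherence is generally regarded as a cause of the typical PV artifacts.

```python
import math


def princarg(x):
    """Wrap an angle to the range [-pi, pi)."""
    return (x + math.pi) % (2.0 * math.pi) - math.pi


def classic_pv_phase(prev_phase, cur_phase, prev_out_phase, n_fft, hop_a, hop_s):
    """Time-direction phase propagation of a classical phase vocoder.

    prev_phase, cur_phase: analysis phases (radians) of frames n-1 and n.
    prev_out_phase: synthesis phases already chosen for frame n-1.
    Returns the synthesis phases for frame n, bin by bin.
    """
    out = []
    for k in range(len(cur_phase)):
        omega_k = 2.0 * math.pi * k / n_fft        # bin centre frequency (rad/sample)
        expected = omega_k * hop_a                 # advance of a tone exactly at the bin centre
        deviation = princarg(cur_phase[k] - prev_phase[k] - expected)
        inst_freq = omega_k + deviation / hop_a    # instantaneous frequency estimate
        out.append(prev_out_phase[k] + inst_freq * hop_s)  # integrate over the synthesis hop
    return out
```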

The paper presents the mathematical model, pseudocode typeset in LaTeX, an explanation of how the algorithm works, and a comparison of the results with the classical PV and some audio software. I’m not the author, and the paper provides no working source code in any programming language, so I had to infer real code from the mathematical model, the variables and the supporting text scattered throughout the paper.

Since this algorithm is based on the classical PV, I think each iteration of the calculation goes as follows.

- apply a window of the frame size (window size) to the normalized input signal

- apply the fast Fourier transform (FFT) to the buffer

- convert the complex numbers from rectangular form to polar form (magnitude and phase)

- perform the additional calculations proposed by the paper

- convert the magnitude and phase back to rectangular form (real and imaginary parts)

- apply the inverse FFT (IFFT) to the buffer

- apply the window to the buffer again

- overlap-add the result into the output buffer
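The steps above can be sketched as a single analysis-modify-synthesis loop. This is a minimal stdlib-only Python sketch under my own assumptions: a periodic Hann window for both analysis and synthesis, a hop of a quarter of the frame, output normalized by the summed squared window, and a hypothetical `modify` hook standing in for step 4. It uses the same hop for analysis and synthesis, so it does not stretch anything yet. With `modify=None` it is an identity round trip, which is a useful sanity check before plugging in the phase processing.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    tw = [cmath.exp(-2j * math.pi * k / n) * odd[k] for k in range(n // 2)]
    return ([even[k] + tw[k] for k in range(n // 2)]
            + [even[k] - tw[k] for k in range(n // 2)])


def ifft(spec):
    """Inverse FFT via the conjugation trick."""
    n = len(spec)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in spec])]


def hann(n):
    """Periodic Hann window."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def stft_loop(signal, n_fft=256, hop=64, modify=None):
    """Analysis-modify-synthesis loop with equal analysis and synthesis hops."""
    window = hann(n_fft)
    out = [0.0] * len(signal)
    norm = [0.0] * len(signal)  # summed squared windows, used to undo the overlap-add gain
    for start in range(0, len(signal) - n_fft + 1, hop):
        # 1. window the (already normalized) input frame
        frame = [signal[start + i] * window[i] for i in range(n_fft)]
        # 2. FFT
        spec = fft(frame)
        # 3. rectangular -> polar
        mag = [abs(c) for c in spec]
        phase = [cmath.phase(c) for c in spec]
        # 4. placeholder for the paper's phase processing (identity by default)
        if modify is not None:
            mag, phase = modify(mag, phase)
        # 5. polar -> rectangular
        spec = [cmath.rect(m, p) for m, p in zip(mag, phase)]
        # 6. inverse FFT; for a conjugate-symmetric spectrum the imaginary part is rounding noise
        frame = [c.real for c in ifft(spec)]
        # 7. synthesis window and 8. overlap-add into the output
        for i in range(n_fft):
            out[start + i] += frame[i] * window[i]
            norm[start + i] += window[i] ** 2
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(out, norm)]
```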

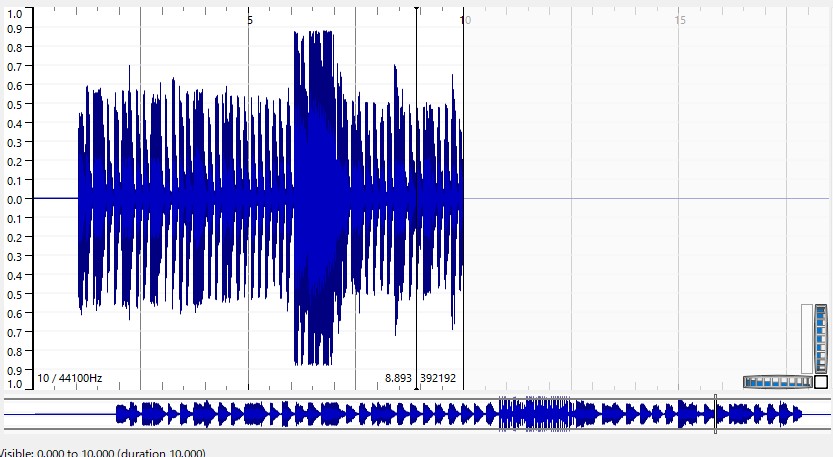

So I started collecting the information needed for step 4 from the paper. It is too complex to explain here, so I recommend comparing the paper with my repository. Below are the results of my code. For the input I chose a WAV file from this song because it has plenty of low-frequency content.
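Step 4 is where the paper departs from the classical PV. As far as I understand it, the phase of a new frame is obtained by integrating estimated phase derivatives, starting from the largest coefficients and spreading outwards with the help of a heap: along time from the previous frame, and along frequency within the current frame. The following is a heavily simplified sketch of that idea for one frame. It assumes the time-direction derivative (`dt_prev`, `dt_cur`, radians per sample) and the frequency-direction derivative (`df_cur`, radians per bin) have already been estimated; how the paper estimates them is not reproduced here. The function name, the trapezoidal integration steps, the tolerance value and giving small coefficients a phase of 0 are my assumptions, so please check the paper for the exact procedure.

```python
import heapq


def heap_integrate_frame(mag_prev, phase_prev, dt_prev, mag_cur, dt_cur, df_cur,
                         hop_s, tol=1e-5):
    """Simplified phase-gradient heap integration for one new frame (hypothetical helper).

    mag_prev, phase_prev, dt_prev: magnitude, synthesis phase and time-direction
        phase derivative (rad/sample) of the previous frame.
    mag_cur, dt_cur, df_cur: magnitude, time derivative (rad/sample) and
        frequency-direction derivative (rad/bin) of the current frame.
    Returns the phase of the current frame.
    """
    n_bins = len(mag_cur)
    threshold = tol * max(max(mag_prev), max(mag_cur))
    phase = [0.0] * n_bins  # coefficients below the threshold simply keep phase 0 here
    todo = {m for m in range(n_bins) if mag_cur[m] > threshold}
    # heap entries: (-magnitude, frame, bin); frame 0 = previous, 1 = current
    heap = [(-mag_prev[m], 0, m) for m in range(n_bins) if mag_prev[m] > threshold]
    heapq.heapify(heap)
    while todo:
        if not heap:
            # nothing known to integrate from: seed with the largest remaining
            # coefficient, whose phase stays at the arbitrary start value 0
            m = max(todo, key=lambda b: mag_cur[b])
            todo.discard(m)
            heapq.heappush(heap, (-mag_cur[m], 1, m))
            continue
        _, frame, m = heapq.heappop(heap)
        if frame == 0:
            # integrate along time over the synthesis hop (trapezoidal rule)
            if m in todo:
                phase[m] = phase_prev[m] + 0.5 * hop_s * (dt_prev[m] + dt_cur[m])
                todo.discard(m)
                heapq.heappush(heap, (-mag_cur[m], 1, m))
        else:
            # integrate along frequency to the neighbouring bins of the current frame
            for nb, sign in ((m + 1, 1.0), (m - 1, -1.0)):
                if nb in todo:
                    phase[nb] = phase[m] + sign * 0.5 * (df_cur[m] + df_cur[nb])
                    todo.discard(nb)
                    heapq.heappush(heap, (-mag_cur[nb], 1, nb))
    return phase
```

The point of the heap is the processing order: the loudest coefficients get their phase first, and quieter neighbours inherit a phase that stays consistent with them both in time and in frequency.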

original: the first 10 seconds of the song’s intro, 44.1 kHz, 16-bit, mono

time stretch ratio 2.0 (20 seconds)

time stretch ratio 0.5 (5 seconds)

time stretch ratio 0.8 (8 seconds)

time stretch ratio 1.2 (12 seconds)
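The output durations above follow directly from the stretch ratio. A common way to apply the ratio (assumed here; the paper’s notation may differ) is to keep the analysis hop fixed and scale the synthesis hop by the ratio. The hypothetical helper below shows the arithmetic with an analysis hop of 256 samples.

```python
def stretch_params(duration_s, ratio, hop_a=256):
    """Synthesis hop and output duration for a time stretch ratio (hypothetical helper).

    Assumes a fixed analysis hop `hop_a` and a synthesis hop scaled by `ratio`.
    """
    hop_s = round(ratio * hop_a)       # the hop must be a whole number of samples
    effective_ratio = hop_s / hop_a    # rounding can shift the ratio slightly
    return hop_s, duration_s * effective_ratio
```

With `hop_a = 256`, the ratios 2.0 and 0.5 map exactly to hops of 512 and 128 (20 s and 5 s), but 1.2 gives `hop_s = 307` and about 11.99 s instead of exactly 12 s, because the hop has to be rounded to whole samples.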

My implementation is probably not exactly the same as the original implementation (you can try your own local files with the sample application pvdoneright.zip after downloading it), but as far as I can hear it is not far off, except that clipping can be observed at 1.2 and the amplitude is lower at 0.5 and 2.0. In addition, I also implemented pitch shifting, shown below.
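I have not verified what causes the clipping at 1.2 and the lower amplitude at 0.5 and 2.0 in my code. One thing worth checking in any PV whose synthesis hop changes with the stretch ratio is the overlap-add gain. The hypothetical helper below, which assumes a periodic Hann window applied at both analysis and synthesis, sums the overlapping squared windows at each position of one hop period.

```python
import math


def hann_sq_ola_gain(n_fft, hop):
    """Overlap-add gain of squared periodic Hann windows over one hop period."""
    w2 = [(0.5 - 0.5 * math.cos(2.0 * math.pi * i / n_fft)) ** 2 for i in range(n_fft)]
    # output sample i (mod hop) receives w2[i], w2[i + hop], w2[i + 2*hop], ...
    return [sum(w2[j] for j in range(i, n_fft, hop)) for i in range(hop)]
```

With a frame of 256 samples the gain is a constant 1.5 for a hop of 64 and 3.0 for a hop of 32, while a hop of 128 gives a ripple between 0.5 and 1.0. So, roughly speaking, unless the output is divided by this gain, its level changes with the synthesis hop.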

pitch shift ratio 1.3 (10 seconds)
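The pitch-shifted result keeps the original 10-second length. A common way to build a pitch shifter on top of a time stretcher (shown here as a generic sketch, not code from the repository) is to stretch by the pitch ratio first (1.3 gives 13 seconds) and then resample by the same factor, which brings the length back to 10 seconds while multiplying every frequency by 1.3. The resampling step could look like this hypothetical `resample_linear` helper:

```python
def resample_linear(x, factor):
    """Resample x so that it plays `factor` times faster (output length ~ len(x) / factor).

    Linear interpolation only; a real implementation would low-pass filter first
    to avoid aliasing when factor > 1.
    """
    n_out = int(len(x) / factor)
    out = []
    for i in range(n_out):
        pos = i * factor            # read position in the input
        j = int(pos)
        frac = pos - j
        nxt = x[j + 1] if j + 1 < len(x) else x[j]
        out.append((1.0 - frac) * x[j] + frac * nxt)
    return out
```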

According to the paper, this algorithm is not suitable for voiced monophonic speech signals, but it does not suffer from the typical phase vocoder artifacts even for extreme time stretching factors. I think this approach could be adopted for specific uses once a few small problems are fixed. If you are interested in my program, feel free to check it out; I welcome feedback via issues or any other channel.

https://github.com/Hajime-san/phase-gradient-vocoder

Finally, this was my first attempt at turning a paper into real code. It was very tough for me, even setting aside the fact that I have never taken a course in audio engineering. But it also gave me a lot of experience, such as reading mathematical models and notation, and learning about audio signal processing.